The Challenge

This is a relatively simple challenge. There’s data in Lakehouse A, which resides in Workspace A, that needs to be copied to Lakehouse B, which resides in Workspace B. It’s not something you’d necessarily need every day, but when you do, you want something that’s efficient and reliable.

We could use a Dataflow Gen2 to copy the data. This option makes sense, but no transformations are needed, so it may not be the most efficient choice. It’s a bit like renting a 26′ box truck to move three medium-sized boxes.

We could create a shortcut in Lakehouse B to the table(s) in Lakehouse A. That’s another good option, but in this challenge the data needs to be copied; a link to that data won’t satisfy the requirement.

The Copy Data activity in a pipeline seems like the most efficient solution. At the time of this writing, however, the Source dropdown menu in the Copy Data activity only allows us to select data sources in the same workspace as the data pipeline or sources configured through the Power BI gateway. Hmmm… seems like one of those features that should exist but doesn’t, the kind I wrote about in my previous post. Fortunately, a solution does exist that will allow us to leverage the Copy Data activity!

The Solution

I came across this solution in the Fabric blog. We can “hardcode” the Workspace and Lakehouse IDs where the source data resides into the pipeline Copy Data activity rather than rely on what populates in the dropdown menus. Here are the steps to implement this solution:

1. Get the Workspace ID and Lakehouse ID of the source data

- To find the Workspace ID, navigate to the workspace where your source lakehouse resides. The Workspace ID can be found in the URL, “https://app.fabric.microsoft.com/groups/[WORKSPACE ID]”. In my environment, the Workspace ID is a 36-character string of letters, numbers and dashes.

- To find the Lakehouse ID, open the lakehouse that contains your source data. The Lakehouse ID can also be found in the URL, “https://app.fabric.microsoft.com/groups/[WORKSPACE ID]/lakehouses/[LAKEHOUSE ID]/…”. Like the Workspace ID, the Lakehouse ID is a 36-character string of letters, numbers and dashes.

2. Create or open a Data pipeline

3. Add a Copy Data activity to the canvas

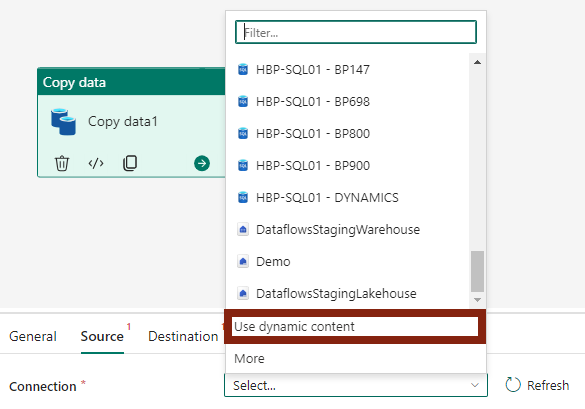

4. Under the Source tab, open the Connection dropdown menu and choose Use dynamic content

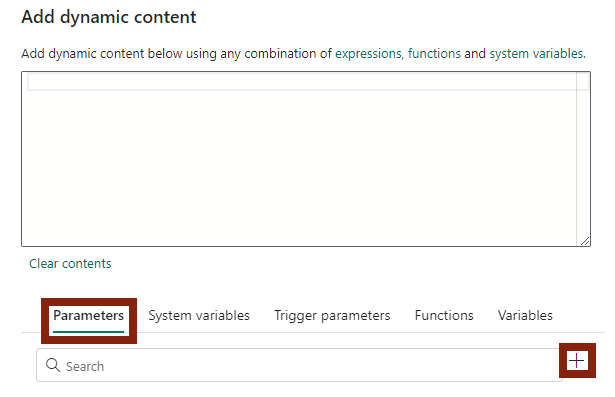

5. When the Add dynamic content pane opens, choose Parameters and click the + (plus) icon to create a new parameter

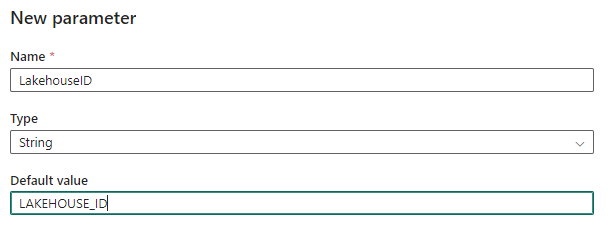

6. Configure the parameter to hold the Lakehouse ID that you grabbed in step 1

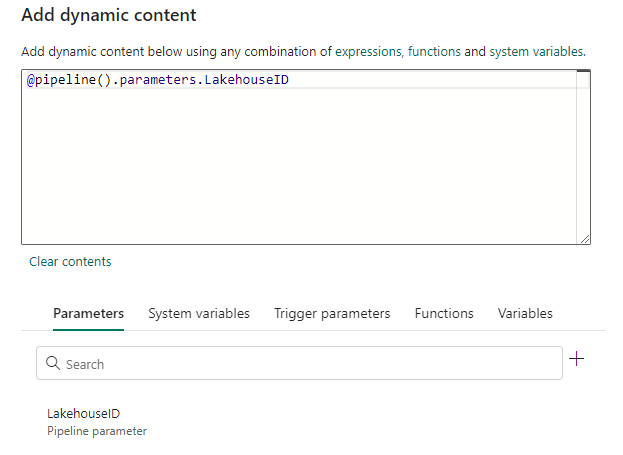

7. Back in the Add dynamic content pane, choose the LakehouseID parameter that you just created to populate the dynamic content input box, then click OK

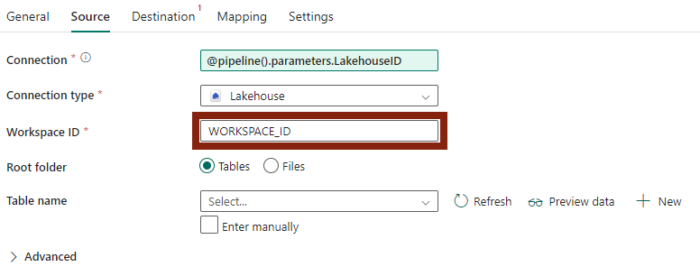

8. Back in the Source tab, paste the Workspace ID that you grabbed in step 1 into the Workspace ID input box

9. Use the Table name dropdown menu to select the table containing the source data you want to copy

10. Configure the destination lakehouse in the Destination tab. If your destination lakehouse is in the same workspace as your data pipeline, you’ll be able to use the dropdown menus to make your selections. If your destination lakehouse is in a different workspace from your data pipeline, follow the same steps used for the Source tab above to point the Copy Data activity to a lakehouse in a different workspace.

11. Save and run your pipeline
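If you’d rather not pick the IDs out of the address bar by eye in step 1, a few lines of Python can do it for you. This is only a minimal sketch, not anything official from Fabric: it assumes the URL follows the “/groups/[WORKSPACE ID]/lakehouses/[LAKEHOUSE ID]/…” pattern shown in step 1, and it assumes both IDs use the usual 8-4-4-4-12 hexadecimal GUID layout (consistent with the 36 characters I see in my environment, but still an assumption). The function name `extract_fabric_ids` and the sample IDs are made up for illustration.

```python
import re
from urllib.parse import urlparse

# Assumption: Fabric IDs are GUIDs in the 8-4-4-4-12 hex layout (36 characters).
GUID_RE = re.compile(r"[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}")


def extract_fabric_ids(url: str) -> dict:
    """Pull the workspace ID and, if present, the lakehouse ID out of a Fabric URL."""
    # Split the URL path into its non-empty segments,
    # e.g. ["groups", "<workspace id>", "lakehouses", "<lakehouse id>"].
    segments = [s for s in urlparse(url).path.split("/") if s]

    ids = {"workspace_id": None, "lakehouse_id": None}
    # Walk consecutive pairs: the segment after "groups" is the workspace ID,
    # and the segment after "lakehouses" is the lakehouse ID.
    for key, value in zip(segments, segments[1:]):
        if key == "groups":
            ids["workspace_id"] = value
        elif key == "lakehouses":
            ids["lakehouse_id"] = value

    # Fail early if something that is not GUID-shaped was picked up.
    for name, value in ids.items():
        if value is not None and not GUID_RE.fullmatch(value):
            raise ValueError(f"{name} does not look like a GUID: {value!r}")
    return ids


if __name__ == "__main__":
    # Placeholder IDs, not real ones.
    sample = (
        "https://app.fabric.microsoft.com/groups/"
        "11111111-2222-3333-4444-555555555555/lakehouses/"
        "aaaaaaaa-bbbb-cccc-dddd-eeeeeeeeeeee"
    )
    print(extract_fabric_ids(sample))
```

The `workspace_id` value is what gets pasted into the Workspace ID input box in step 8, and `lakehouse_id` is what goes into the LakehouseID parameter in step 6. The GUID check is there mostly to catch a half-copied URL before it ends up in the pipeline.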