I recently embarked on a large project to build out a data lake for my company. This is uncharted territory for me. Before the project started, my knowledge of Python, Delta Lake, and Apache Spark was, well, basically nonexistent. My background is in BI and analytics, not data engineering, though I have been looking to expand my skills and experience in this area. I’m blessed to have worked with great data engineers and have learned a lot from partnering with them, but the time has come to step out and start building, both the lake and my skillset!

My company is already very invested in Power BI, so we decided to give Fabric a shot using the free 60-day trial, which, over a year later, still has not expired (thanks Microsoft!). After a lot of research on Fabric and other tools, including Snowflake, dbt, and Databricks, we chose Fabric. I won’t expand on the details of how we reached our choice in this post, but reach out if you want to chat more about it.

Over the course of this project, I will document and share the journey along with tips, learnings, and links to other content that I’ve found helpful.

Tip #1: Do the Research

Fabric is certainly the cool new kid on the block in the Microsoft BI stack. But that doesn’t necessarily mean it’s right for every company or use case. As of the time of this post, Fabric is still new. It’s buggy. There are things that seem simple and like they should just work but don’t. There are features that seem like they should be available but aren’t or, at least, aren’t yet. Longtime Power BI users are used to rapid iterations and releases. Fabric is following the same pattern. This is great, as new features and bug fixes can come our way more quickly. However, I have also found that these rapid iterations have introduced bugs. Features that worked last week don’t work this week. Those statements aren’t complaints; they are the costs of adopting a young platform. If you need a mature platform, Fabric may not be the best option in its current state.

Before you go full steam ahead with Fabric, do the homework. Set up a trial license. Throw some test workloads at it. Compare the alternative platforms. Talk to experienced data engineers. Attend user group or conference sessions on these topics to get educated. We did all of these and we’re much better prepared to proceed with Fabric as a result. The research helped us recognize where Fabric is today, understand that we’re betting on Microsoft to keep improving it over time, and accept that we needed to be patient during development when the technology took us on unexpected turns.

Tip #2: Plan

Whatever direction you choose to go, take time to gather good requirements. Understand the long view of your lake. Who will utilize it? What are their comfort level and skillset? What tools are they using? How will the lake grow over time? Who will manage the lake? What are their skillsets and experience? What are the performance needs? What expectations do the stakeholders have of the lake? There are many more questions. Taking time to identify and understand the key questions for your scenario will go a long way in helping you to architect the right solution.

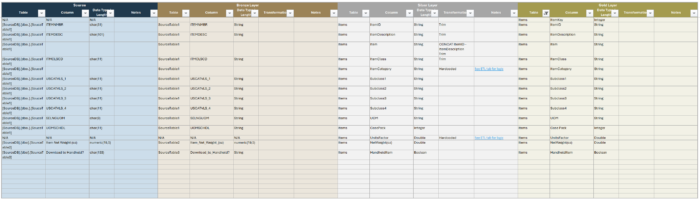

Our team knew early on that we wanted to incorporate a medallion architecture. One of the most beneficial exercises our data team engaged in was to brainstorm the ideal state of our gold level lakehouse. We spent time whiteboarding the necessary fields for analysis and reporting. We settled on consistent naming conventions for our tables and fields. We agreed on formatting for fields in our date table. We considered appropriate granularities of fact tables. There was more, but you get the idea. Every person in the room brought a valuable perspective that is shaping what the final product will look like. And every person brought a perspective that others hadn’t considered. By working together, we designed a product that will serve as a very solid initial release.
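A naming convention is easier to keep once it can be checked automatically. Here is a minimal Python sketch of that idea. The convention itself (snake_case everywhere, tables prefixed with `dim_` or `fact_`) and every table and field name are hypothetical, chosen only for illustration; swap in whatever your own whiteboard sessions produce.

```python
import re

# Hypothetical convention (an assumption for this example):
# tables are snake_case and start with "dim_" or "fact_";
# fields are plain snake_case.
TABLE_PATTERN = re.compile(r"^(dim|fact)_[a-z][a-z0-9]*(_[a-z0-9]+)*$")
FIELD_PATTERN = re.compile(r"^[a-z][a-z0-9]*(_[a-z0-9]+)*$")


def naming_violations(tables):
    """Return the names that break the convention.

    `tables` maps each table name to a list of its field names.
    Fields are reported as "table.field".
    """
    violations = []
    for table, fields in tables.items():
        if not TABLE_PATTERN.match(table):
            violations.append(table)
        for field in fields:
            if not FIELD_PATTERN.match(field):
                violations.append(f"{table}.{field}")
    return violations


# Made-up proposal with two deliberate mistakes.
proposed = {
    "dim_date": ["date_key", "fiscal_year", "MonthName"],
    "SalesFact": ["order_id", "net_amount"],
}

print(naming_violations(proposed))
```

Running a check like this against a proposed schema flags `MonthName` and `SalesFact` before they ever reach the lakehouse.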

These whiteboard sessions became the basis for our technical documentation. I took the required fields for each table and mapped them back to fields in their respective data sources and noted the transformations that we would need to anticipate at each layer. An example of the documentation is below. Producing this documentation took time up front but is making development time more efficient.
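The same source-to-target mapping can also live in code, where notebooks and tests can read it. The sketch below is a hypothetical illustration of that idea, not the documentation described above: the source system, field names, and transformation notes are all invented, and the three layers are assumed to be the usual bronze, silver, and gold of a medallion architecture.

```python
from dataclasses import dataclass

LAYERS = ("bronze", "silver", "gold")


@dataclass(frozen=True)
class FieldMapping:
    """One gold-layer field traced back to its source (all values hypothetical)."""
    target_table: str
    target_field: str
    source_system: str
    source_field: str
    bronze: str  # transformation note for the bronze layer
    silver: str  # transformation note for the silver layer
    gold: str    # transformation note for the gold layer


# Invented example rows; a real list would come from the whiteboard sessions.
MAPPINGS = [
    FieldMapping(
        "fact_sales", "order_date", "erp", "ORD_DT",
        bronze="land as-is (string)",
        silver="cast to date",
        gold="join to dim_date on date_key",
    ),
    FieldMapping(
        "fact_sales", "net_amount", "erp", "NET_AMT",
        bronze="land as-is (string)",
        silver="cast to decimal(18,2)",
        gold="no change",
    ),
]


def transformations_for(layer, mappings):
    """Return (target field, transformation note) pairs for one layer."""
    if layer not in LAYERS:
        raise ValueError(f"unknown layer: {layer!r}")
    return [
        (f"{m.target_table}.{m.target_field}", getattr(m, layer))
        for m in mappings
    ]


for target, step in transformations_for("silver", MAPPINGS):
    print(f"{target}: {step}")
```

Keeping the mapping in one structure like this means the question "what needs to happen at the silver layer?" has a single answer that both the documentation and the code can share.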

Tip #3: Utilize Microsoft Learn

https://learn.microsoft.com/en-us/training/paths/get-started-fabric

This suggestion is a bit like suggesting that you read the owner’s manual when you buy a new car or appliance. Some people peruse it but most just discard it and dive in. I’ll suggest taking the time to at least glance over these training modules. I found a lot of nuggets here that I wouldn’t necessarily have found on ChatGPT or by just venturing into the wild of Fabric on my own. They cover everything from an introduction to the Delta Lake format and how it’s used in Fabric, to sample PySpark scripts, to best practices. Note, these modules won’t go to uber depth on every topic, but they helped me identify where I needed to consult other documentation to round out my thinking. And in many cases the modules contained links to that documentation. Well worth the time spent.

In Conclusion

More to come as the journey progresses. I plan to include more in-depth and technical content in future posts. Thank you in advance for journeying with me!

What are you learning in Fabric? What tips and tricks would you like to learn about?

And by the way, in case you’re wondering, we have purchased a Fabric capacity.